The CRM Grid – A Microsoft CRM Blog

Greg Owens’ rose-tinted MS-CRM mumblings

Social Pane control not working in custom forms?

What with being so busy with clients, combined with the growth of the CRM community, it’s been a long time since I discovered anything genuinely new that hadn’t already been blogged about elsewhere. Finally, over 18 months since my last post, I found something worth shouting about!

The Social Pane just shows as Notes on custom forms

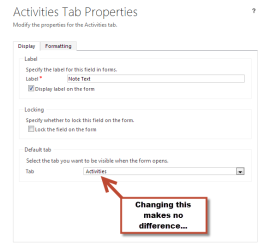

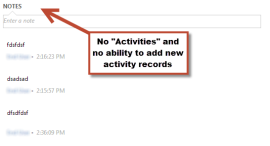

I manage an ISV solution that is developed in CRM Online. The solution began life in the 2011 guise but I’ve recently upgraded to CRM Online 2013 and I’m in the process of redesigning forms. I wanted to use the new “Social Pane” style control that surfaces Activity, Notes and Wall Posts and is best seen on the native “Account” form. Several blog posts will tell you that this control can be added to your form by clicking on the familiar “Notes” button in the Form Designer. The problem was, on my custom forms, the control simply rendered the “Notes” bit, even if I changed its properties to show, for example “Activities” as the “Default Tab”.

“Upgrade your forms to CRM 2013”

The underlying issue, of course, is that my forms hadn’t been “Upgraded to CRM 2013”. Contrary to my initial understanding, “Upgrading to CRM 2013 forms” was more than just “making it look like CRM 2013” by using the 3 column layout and some of the new controls. Never mind the fact that amending the layout, adding the Bing Maps control and using the new SDK methods all work just fine; this is apparently not enough! Instead I must (and I paraphrase) abandon my old form, merge it into another form and then edit it back in line with what I want (see this blog for more details). This seems like a lot to do considering that it’s possible to get my form looking and behaving 99% the way I want just by using the form designer. In any case, I was unaware of a “problem” until I tried to use the Notes/Social Pane control. The reason for its behaviour is non-intuitive – it’s really unclear why it doesn’t work the same way on native and custom forms.

The Solution – set the FormPresentation property

So the fix? Export the form as part of an unmanaged solution, extract the contents, then edit the customizations.xml file and find the FormXml of the form you wish to “upgrade”. You’ll need to switch the value at the following path from “0” to “1”. After that, save the file, rezip and reimport the solution. Publish and you’re good to go.

//FormXml/forms/systemform/FormPresentation
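If you have several forms to fix, the manual edit can be scripted. The following is a minimal Python sketch, not a tested tool: it assumes that FormPresentation is a child element of each systemform element holding the text “0” or “1” (as the path above suggests) and that each systemform has a formid child. The function name and the optional form_id filter are my own inventions for illustration.

```python
import xml.etree.ElementTree as ET


def upgrade_form_presentation(xml_text, form_id=None):
    """Flip FormPresentation from 0 to 1 and return (new_xml, forms_changed).

    Assumes the layout //FormXml/forms/systemform/FormPresentation.
    If form_id is given, only the form whose <formid> matches is changed
    (braces and letter case are ignored when comparing).
    """
    root = ET.fromstring(xml_text)
    changed = 0
    for systemform in root.iterfind(".//FormXml/forms/systemform"):
        if form_id is not None:
            current = systemform.findtext("formid", default="")
            if current.strip("{}").lower() != form_id.strip("{}").lower():
                continue
        presentation = systemform.find("FormPresentation")
        if presentation is not None and (presentation.text or "").strip() == "0":
            presentation.text = "1"
            changed += 1
    return ET.tostring(root, encoding="unicode"), changed
```

You would read the extracted file into a string, pass it through the function, write the result back and then rezip as described above. Keep a copy of the original solution file, since ElementTree may normalise whitespace and drop comments when it writes the XML back out.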

Club 100 2012 – 60 Minute Challenge (Round 2)

Round 2 (Buckmore Park)

Qualified: 3rd Race: 5th

Weather: Dry, overcast

At last, my 2012 racing season begins. After the opening round in mid-February was rescheduled following snow, the Club 100 60 Minute Challenge took until March 31st to get started. Officially “Round Two”, the race at Buckmore Park in Kent was staged in perfect conditions: a dry track and mostly clear skies. Not too hot but enough warmth to yield some grip on the old tarmac.

Qualifying

The season started better than predicted. With a 36-kart grid, the track was busy during practice and qualifying, so after getting a feel for the kart and trying a few lines out, reading the traffic and looking for a suitable gap was the order of the day. The effort paid off, yielding a career-best 3rd on the grid, just a tenth of a second behind reigning champion Eddie Hall (but sadly 3 tenths off local-boy Andrew Johnson).

The Race

Last year my starts were simply too cautious and this alone cost me several places at many races – definitely an area for improvement! Evidently it’s much easier to get a solid start from the front of the grid and consequently, 2nd place beckoned as a gap opened up into turn one. Sadly, the tighter line on cooler tyres forced a slight lift – just enough for Hall to hang on around the outside and hit turn two back in second.

Further back, things were getting busy. Golby, starting from 6th in his first race for two and a half years, locked up and lost the back end heading into the hairpin at turn 3. This set off a chain reaction that left around 10 karts stalled and set in motion some difficult racing for the front runners too. Strong opening laps from both Johnson and Hall created a gap of over a second, but the gap would yo-yo several times as those involved in the lap one collision became backmarkers by lap three.

Third place became fourth on lap 5 after a clean move up the inside into turn one by kart 16, and as the out-of-position tail-enders tried to make something of their unlucky starts, racing against the leaders became tricky. If only the blue flags were in use…! By lap 9 Tim Hill made a carbon copy move into turn one and left me in 5th. Things got tricky as I was left battling with a backmarker only a few tenths slower than me but convinced we were racing for position. Several times I made a move only to be retaken, as he assumed he had lost a place. The gap to the leaders grew…

The only solution was to make the mandatory pit stop as soon as the pit window opened at 20 minutes. It was a reasonable stop – but a slow approach and clumsy exit from the kart cost me 6 seconds over the leaders. As the first to pit, I re-joined in 17th.

Now the racing really began. An aggressive move from Clackett into turn one (possibly assuming it was me, not the guilty backmarker, who nearly took him out of the race a few corners earlier) forced me wide and also opened the door for Pearce to slip through too. Pearce and I would exchange positions several times over the next 40 minutes. After the pit stops were over, I was down in 6th with Pearce just two tenths behind. Pearce would make another move into turn one a few laps later (the Achilles heel of my race!) but together we caught and passed perennial race-winner Glen Beard.

Lap 60, 12 minutes of the race remaining, and I made a move into the turn two hairpin to regain 4th place. The next 9 places were covered by around 12 seconds as we all battled to take the 4th spot. I pulled a gap of over a second to Pearce in 5th but gradually he reined it back. With three minutes to go, we were nose to tail across the line. As we began the penultimate lap, he risked his lift home (yes, we were car sharing for the journey) and pulled a brilliant move to get alongside into turn one. The only option was to yield the position and charge for the last two laps. Alas, I would finish in fifth, just two tenths behind Pearce, but it was a career best in this championship. The leaders were over thirty seconds up the road by the chequered flag – gaining a podium this year will be as hard as ever!

A pleasing start to the season, but plenty to improve on ahead of round 3 at Whilton Mill in June 🙂

Click here for the Official Results

SSRS – checking an external image exists

15 July 2011 – Updated with working code.

I’ve just noticed that this post had some comments that it wasn’t working. I fear it may never have worked. I’ve reviewed the installation where I deployed this code originally and the working, valid code is now listed below. Apologies to anyone who lost time trying to use the original.

——-

SSRS supports adding external images to your reports. This is useful if, like my client, you have images stored in a SharePoint Document Library. Using some commonly-found code for structuring their document libraries, most accounts have had an image called “main.jpg” uploaded. If “main.jpg” does not exist, there are good business reasons for this.

The client required a report which should include “main.jpg”, if it exists. Otherwise no image should be present at all. The problem is that SSRS is only aware of the URL to the image as a simple string. I used SQL to construct a URL string for each record, but SSRS can’t natively detect whether an image exists at this URL or not. There is a way around this, however. SSRS allows you to embed your own custom code in your report properties. The following code checks whether an image exists at a given URL and returns true or false. This code can be called from an image control’s “Hidden” property, or combined with an expression in the image control’s “Value” property to manipulate the URL:

    Function IsValid(ByVal Url As String) As Boolean
        Dim sStream As IO.Stream
        Dim URLReq As Net.HttpWebRequest
        Dim URLRes As Net.HttpWebResponse
        Try
            URLReq = Net.WebRequest.Create(Url)
            URLReq.Credentials = System.Net.CredentialCache.DefaultCredentials
            URLRes = URLReq.GetResponse()
            sStream = URLRes.GetResponseStream()
            Dim reader As String = New IO.StreamReader(sStream).ReadToEnd()
            Return True
        Catch ex As Exception
            Return False
        End Try
    End Function

To use it, copy the code above to your report properties (Report > Report Properties… > Code), then after inserting an image control (with Image Source of “Web”), add the following code to the Hidden property (Image > Visibility > Hidden > Expression…):

=Not Code.IsValid(Fields!fieldWithUrlToImage.Value)
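If you want to sanity-check a batch of image URLs outside the report (for example, before deploying it), the same idea can be sketched in Python. This is only an illustration and cannot be used inside SSRS itself. It sends no Windows credentials, so a SharePoint library that requires authentication would report every image as missing. The function name url_exists is my own.

```python
import urllib.error
import urllib.request


def url_exists(url, timeout=5.0):
    """Return True if something can be fetched from url, otherwise False.

    A HEAD request is used so that the image body is not downloaded.
    Any failure (bad URL, 404, refused connection, timeout) counts as "missing".
    """
    try:
        request = urllib.request.Request(url, method="HEAD")
        with urllib.request.urlopen(request, timeout=timeout) as response:
            status = getattr(response, "status", None)
            # Non-HTTP schemes such as file:// carry no status code.
            return status is None or 200 <= status < 300
    except (urllib.error.URLError, ValueError, OSError):
        return False
```

As with the report code, the result needs negating if you use it to decide whether something should be hidden.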

Any suggestions, improvements or alternative approaches are welcome!

Is my CRM 2011 server licenced or on trial?

There is no obvious way to determine if your CRM server is using a trial licence key or a full licence key. You can infer whether you are on a trial or not by looking in Deployment Manager on the CRM Server.

An unregistered CRM 2011 installation will show a Product ID in Deployment Manager with the format:

XXXXX-010-XXXXXXX-XXXXX

A registered installation will show Product ID in the format:

XXXXX-06X-XXXXXXX-XXXXX
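If you have a number of servers to check, the comparison is easy to script once you have copied the Product ID out of Deployment Manager. The Python sketch below is a hypothetical helper that encodes only the two patterns shown above; anything else is reported as “unknown” rather than guessed at. The sample IDs in the usage note are made up.

```python
import re

# Four groups of 5, 3, 7 and 5 characters, as in the formats shown above.
PRODUCT_ID_PATTERN = re.compile(
    r"^[0-9A-Za-z]{5}-([0-9A-Za-z]{3})-[0-9A-Za-z]{7}-[0-9A-Za-z]{5}$"
)


def classify_product_id(product_id):
    """Return 'unregistered', 'registered' or 'unknown' for a Product ID."""
    match = PRODUCT_ID_PATTERN.match(product_id.strip())
    if match is None:
        return "unknown"
    middle = match.group(1)
    if middle == "010":
        return "unregistered"
    if middle.startswith("06"):
        return "registered"
    return "unknown"
```

For example, classify_product_id("12345-010-1234567-12345") gives "unregistered" and classify_product_id("12345-063-1234567-12345") gives "registered".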

If you want further reassurance, you can confirm whether the key you have installed is a full key by attempting to change it to a trial licence key via “Change Product Key” in Deployment Manager. If a full key has previously been applied, you will receive an error if you try to change it to a trial key.

Trial keys:

- Microsoft Dynamics CRM Workgroup Server 2011 (5 CAL limit): H84KC-JH8DF-7PDYK-TXBXR-2RMMT

- Microsoft Dynamics CRM Server 2011 (no CAL limit): MQM2H-JYYRB-RRD6J-8WBBC-CVBD3

Deleting Users in MS CRM 4.0

I have a SQL script (see below) which will allow you to delete a user fully and completely from Microsoft Dynamics CRM 4.0. It is, of course, utterly wrong and unsupported to do this, but still – the script exists and seems to work just fine.

I originally wrote it to carry out some particularly unsupported work with a previous client. Later on, I found use for it on more than one occasion during my own development (specifically when importing clients’ existing databases into my own Virtual Machines).

Some time ago, I pulled the script from here but I can no longer keep up with requests to send it on. Consequently you can now find it at the end of this post.

I chose to remove it since even my own tenuous example of how it might be useful turned out to be overengineered. I had suggested that deleting users might be an easy option when importing databases full of users into a development environment, but where you the developer don’t want the overhead of creating AD accounts and then mapping those users. Ivan (comments, below) has correctly pointed out behaviour which I was previously aware of but had slipped my memory: mapping users is not mandatory during the “Import Organisation” process (well, you need to map at least one user, but otherwise…). Despite showing an “Error” during the mapping process, so long as at least one user has been mapped, one can opt to ignore the issue and proceed with the import. Thank you Ivan.

In my opinion, there are very few (if any) viable reasons to use this script, so I recommend that you exhaust all other options before considering it. Be aware that CRM stops you deleting users for a reason, and there are usually other ways to approach the problem.

Disabled fields incorrectly saving to the CRM database

Earlier this week, I encountered what seems to be a bug in MS CRM 4.0 which I had not noticed before. This occurred with Update Rollup 13 and Update Rollup 14 (and possibly others…?) and has been replicated on at least one other unrelated environment. (Edit: but not replicable on all environments…)

The problem manifests itself with fields marked on the CRM Form Designer as “readonly” being incorrectly saved back to the database, during the create stage. Strangely on affected machines I was unable to replicate this problem when updating records (which behaved as expected).

According to the CRM 4.0 SDK, readonly/disabled fields are not submitted to the server during the save process. After all, that’s what the ForceSubmit method is for (my emphasis):

All enabled fields will be submitted after they have been modified either through script or by user input.

Contrary to this, on creation of a record, data in read-only fields is currently being submitted and saved to the database. I haven’t identified why this occurs on some deployments and not on others. Details of the main environment that I have encountered this on are listed at the end of this posting. To test your environment, try following these steps:

- Create a new entity, “new_test”

- Create a new string attribute, “new_editablestring”

- Create a new string attribute, “new_readonlystring”

- Place both fields onto the form

- Mark “new_readonlystring” as Read-Only

- Enable the “onchange” event for “new_editablestring”

- Add the following script to the onchange event handler

crmForm.all.new_readonlystring.DataValue = crmForm.all.new_editablestring.DataValue;

- Save & publish the form

- Create a new_test record

- Enter a value in the Editable String field, e.g. “Creation String”

- Note that the ReadOnly string value is correctly changed on screen

- Hit save & close

- Open the record again, note that the “new_readonlystring” now contains a value “Creation String”

- Enter a new value in the Editable String field, e.g. “Edited String”

- Note that the ReadOnly string value is correctly changed on screen to “Edited String”

- Hit save & close

- Open the record again, note that the “new_readonlystring” still contains a value “Creation String” as it was correctly NOT updated

After some testing, it became clear that no permutation of programmatically setting the field to Disabled, disabled, readOnly, ReadOnly or readonly made any difference. The only answer, it seems, is to prevent read-only attributes from hitting the database via a plugin. This is simple enough – the Create message accepts a Target input parameter which is a DynamicEntity. We can simply remove the unwanted properties from the DynamicEntity before the Target gets committed. This is difficult to manage though – every time you make a field read-only on your form, you would need to recompile your code, or update your plug-in configuration strings (depending on how you chose to handle this). There is however a better solution – retrieve the form definition from the CRM platform, identify which fields should be read-only and omit them dynamically from the input target. And here is how this is done:

    using System;
    using System.Xml;
    using Vitalogy.XRM.Common;
    using Vitalogy.XRM.Plugins.BaseClass;
    using Microsoft.Crm.Sdk;
    using Microsoft.Crm.SdkTypeProxy;

    namespace Vitalogy.XRM.Plugins
    {
        public class AllEntitiesCreate : VitalogyBasePluginClass, IPlugin
        {
            /// <summary>
            /// Identifies read-only fields from the form and ensures that they
            /// are not saved to the database (this shouldn't happen anyway
            /// but there appears to be a bug in the CRM platform)
            /// </summary>
            /// <param name="context" />
            public void Execute(IPluginExecutionContext context)
            {
                if (!PluginShouldRun(context))
                {
                    DebugLog.LogMessage(string.Format("Plug-In \"{0}\" is not designed to run in this scenario", GetType().Name));
                    return;
                }

                DebugLog.LogStart();

                try
                {
                    var entity = (DynamicEntity)context.InputParameters.Properties[ParameterName.Target];

                    // Filter out read-only attributes from the create message
                    FilterReadOnlyAttributes(context, entity);
                }
                catch (System.Web.Services.Protocols.SoapException ex)
                {
                    DebugLog.LogError(string.Format("A SOAP error occurred in the {0} plug-in.\n\n{1}", GetType(), ex.Detail), ex);
                    throw new InvalidPluginExecutionException(
                        String.Format("A SOAP error occurred in the {0} plug-in.", GetType()), ex);
                }
                catch (Exception ex)
                {
                    DebugLog.LogError(string.Format("A general exception occurred in the {0} plug-in.", GetType()), ex);
                    throw new InvalidPluginExecutionException(
                        String.Format("A general exception occurred in the {0} plug-in.", GetType()), ex);
                }
                finally
                {
                    DebugLog.LogFinish();
                }
            }

            private static void FilterReadOnlyAttributes(IPluginExecutionContext context, DynamicEntity entity)
            {
                using (var crmService = context.CreateCrmService(true))
                {
                    DebugLog.LogMessage("Plug-in to strip out read-only fields during record creation");

                    // get formxml
                    DebugLog.LogMessage(string.Format("Getting formXml for entity {0}", entity.Name));

                    // RetrieveFormXmlRequest is unsupported since it is for "internal use
                    // only" but the alternative involves getting the entity type code
                    // from metadataservice then issuing a request for OrganisationUI
                    // for the given entity. This too is deprecated due to not being
                    // supported in CRM 2011 - but what are we to do, eh?
                    var formXmlRequest = new RetrieveFormXmlRequest {EntityName = entity.Name};
                    var formXmlResponse = (RetrieveFormXmlResponse) crmService.Execute(formXmlRequest);
                    var formXml = formXmlResponse.FormXml;
                    DebugLog.LogMessage(string.Format("Retrieved formXml: {0}", formXml));

                    // xpath to identify read-only fields
                    var formXmlDocument = new XmlDocument();
                    formXmlDocument.LoadXml(formXml);
                    var disabledAttributes = formXmlDocument.SelectNodes("//control[@disabled='true']/@id");

                    // pluck them out of the create message
                    if (disabledAttributes == null)
                    {
                        DebugLog.LogMessage("No read-only attributes found");
                        return;
                    }

                    DebugLog.LogMessage(string.Format("Retrieved {0} read-only attributes", disabledAttributes.Count));

                    foreach (XmlNode disabledAttribute in disabledAttributes)
                    {
                        DebugLog.LogMessage(string.Format("{0} is read-only", disabledAttribute.Value));

                        if (entity.Properties.Contains(disabledAttribute.Value))
                        {
                            DebugLog.LogMessage("and has been removed from the Target input parameter");
                            entity.Properties.Remove(disabledAttribute.Value);
                        }
                    }

                    context.InputParameters[ParameterName.Target] = entity;
                }
            }

            private static bool PluginShouldRun(IPluginExecutionContext context)
            {
                // only run if in the pre-Create stage
                if (context.Stage == MessageProcessingStage.BeforeMainOperationOutsideTransaction)
                {
                    if (context.InputParameters.Properties.Contains(ParameterName.Target) &&
                        (context.InputParameters.Properties[ParameterName.Target] is DynamicEntity))
                    {
                        return context.MessageName == MessageName.Create;
                    }

                    return false;
                }

                return false;
            }
        }
    }

A few things to note:

- Vitalogy.XRM.Common and Vitalogy.XRM.Plugins.BaseClass are simply used to implement the log4net logging framework (and hence define DebugLog).

- This implementation is very simplistic so isn’t exactly efficient – the next step would be to cache the list of read-only fields so that the form definition is not retrieved at every Create.

- This code can only deal with fields defined as “ReadOnly” in the form definition. If you have client-side script which disables fields in onLoad, this code will not identify those fields (though if you’ve coded consistently, you might be able to examine your client-side code within the formXml to find these cases…).
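To make the filtering and the suggested caching step concrete outside of a CRM plug-in, here is a small language-neutral sketch in Python. It is not the plug-in itself: the target is modelled as a plain dictionary, fetch_form_xml is a hypothetical callable standing in for the RetrieveFormXmlRequest call, and the cache never expires (a real plug-in would need to refresh it when the form is republished).

```python
import xml.etree.ElementTree as ET


def read_only_field_ids(form_xml):
    """Return the ids of all <control> elements marked disabled='true'."""
    root = ET.fromstring(form_xml)
    return frozenset(
        control.get("id")
        for control in root.iter("control")
        if control.get("disabled") == "true" and control.get("id")
    )


class ReadOnlyFieldCache:
    """Remembers the read-only fields per entity so the form is fetched once."""

    def __init__(self, fetch_form_xml):
        self._fetch_form_xml = fetch_form_xml  # hypothetical: entity name -> formXml
        self._fields_by_entity = {}

    def fields_for(self, entity_name):
        if entity_name not in self._fields_by_entity:
            form_xml = self._fetch_form_xml(entity_name)
            self._fields_by_entity[entity_name] = read_only_field_ids(form_xml)
        return self._fields_by_entity[entity_name]


def strip_read_only(cache, entity_name, target):
    """Return a copy of target without the attributes that are read-only on the form."""
    blocked = cache.fields_for(entity_name)
    return {name: value for name, value in target.items() if name not in blocked}
```

The design choice here is to key the cache on the entity name rather than on the formXml text, because the expensive part is retrieving the form, not parsing it.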

My environment

I encountered the aforementioned issue in the following conditions:

- Combined Application and Database server

- Microsoft Dynamics CRM 4.0 – Update Rollup 14

- Microsoft Windows Server 2008 R2 Standard

- Microsoft SQL Server 2008 SP1 (64 Bit)

- Various clients, including:

- Internet Explorer 7 (7.0.5730.13) from client

- Internet Explorer 8 (8.0.7600.16385) on server

- When signed in as a CRM Administrator or non-administrative user

Campaign Response – required privileges (or “how to hide”)

I’ve only written the title and I’ve already over-promised…

So you want to suppress the Campaign Response activity but don’t want to start hacking at the CRM files. What options have you got? Very few, to be honest.

Entity visibility is driven via privileges and by turning off access to the right parts of the system, most things can be hidden. The problem comes with the side-effects. Since Campaign Response does not have an explicit permission-set in its own right, it is not obvious as to what needs to be changed to hide it from users. Here’s the solution – you can choose to remove access to either:

- Activity : [Append] AND Campaign : [AppendTo]

- OR Activity : [AppendTo]

By removing access to one of these two groups in the appropriate role(s), Campaign Response will cease to be shown to all users that are subject to that role (assuming that they don’t have additional roles that grant these permissions). It’s a bit limiting though, since without “Activity : AppendTo” you’re not going to be able to add Notes or attachments to an Activity, and without “Activity : Append” you won’t be able to set Regarding…

I didn’t say that this would be helpful!

Which privilege failed?

A quick tip that might come in handy if you start editing roles and permissions in your CRM deployment. Often when I start to define completely custom roles, especially when using a brand new role with no existing values, I will encounter an error message when testing the role:

Error Description:

0x80040220 SecLib::CheckPrivilege failed. Returned hr = -2147220960, User: 00000000-0000-0000-0000-000000000000, PrivilegeId: a3311f47-2134-44ee-a258-6774018d4bc3 Platform

Error Details:

0x80040220 SecLib::CheckPrivilege failed. Returned hr = -2147220960, User: 00000000-0000-0000-0000-000000000000, PrivilegeId: a3311f47-2134-44ee-a258-6774018d4bc3 Platform

So what do I need to change to make this work? MSCRM isn’t being particularly helpful with this error message, so here’s how to make it make sense. Run the SQL script below against your [Organization]_MSCRM database. Replace the GUID in the query below with the PrivilegeId GUID in the error message:

select [Name] from dbo.PrivilegeBase where privilegeid = 'a3311f47-2134-44ee-a258-6774018d4bc3'

You should get a single result back, like ‘prvReadEntity’ (Read access required for “Entity” which is found on the Roles matrix under the Customization tab, Entity row, Read column) or ‘prvCreateUserSettings’ (Business Management tab, User Settings row, Create column). As you can see, the Name of the privilege should give you the pointer needed to set the required permissions.
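If you hit this error often, pulling the GUID out of the message and building the query can be scripted. The Python sketch below is a convenience helper of my own, not part of CRM. The split_privilege_name function is a heuristic that assumes names follow the “prv” + action + entity pattern seen in the two examples above; the list of actions is an assumption and names that don’t fit return None.

```python
import re

GUID = r"[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}"
PRIVILEGE_ID_PATTERN = re.compile(r"PrivilegeId:\s*(" + GUID + r")")

# Assumed action names; "AppendTo" must be tried before "Append".
ACTIONS = ("AppendTo", "Append", "Assign", "Create", "Delete", "Read", "Share", "Write")


def extract_privilege_id(error_text):
    """Return the PrivilegeId GUID from the error text, or None if absent."""
    match = PRIVILEGE_ID_PATTERN.search(error_text)
    return match.group(1).lower() if match else None


def build_lookup_query(privilege_id):
    """Build the PrivilegeBase lookup, refusing anything that is not a GUID."""
    if not re.fullmatch(GUID, privilege_id):
        raise ValueError("not a GUID: %r" % privilege_id)
    return "select [Name] from dbo.PrivilegeBase where privilegeid = '%s'" % privilege_id


def split_privilege_name(name):
    """Split e.g. 'prvReadEntity' into ('Read', 'Entity'); None if it doesn't fit."""
    if not name.startswith("prv"):
        return None
    rest = name[3:]
    for action in ACTIONS:
        if rest.startswith(action) and len(rest) > len(action):
            return action, rest[len(action):]
    return None
```

Paste the error text into extract_privilege_id, feed the result to build_lookup_query, and run the resulting statement against your [Organization]_MSCRM database as described above.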

You are not a Deployment Administrator

We’ve all seen this error at some point when trying to access the Microsoft Dynamics CRM Deployment Manager on the server:

Only the Deployment Administrators are able to use Deployment Manager. You are not a Deployment Administrator.

Most of the time this is not a problem at all – perhaps you’re logged in with the wrong account or you simply need to request that another administrator logs in and adds you to this list. Sometimes it can be trickier though – perhaps the only administrator is on holiday, has left (and had their account deleted rather than just disabled) or any other number of things that might just ruin your day. The only supported method at this point is a reinstall of CRM. Cripes…!

How about an unsupported method to get you by?

Try this little SQL script which should add you to the list of Deployment Administrators. It is ENTIRELY UNSUPPORTED and probably shouldn’t be used in a production environment etc etc. But needs must and this might just save your day (but don’t blame me if I make it worse, right?).

IMPORTANT NOTE: This script will get you into Deployment Manager, but if you were NOT already a CRM user, it won’t get you into the CRM front-end. Clearly something is missing from my script at present – be very, very careful if you use this in production…

    USE MSCRM_CONFIG

    DECLARE @username      AS VARCHAR(MAX),
            @newuserguid   AS uniqueidentifier,
            @userguid      AS uniqueidentifier,
            @adminroleguid AS uniqueidentifier

    -- *** TODO: ADD THE USERNAME YOU WANT TO MAKE A DEPLOYMENT ADMINISTRATOR HERE
    SET @username = '<domain>\<username>'
    SET @newuserguid = NEWID()

    -- Get guid for the Deployment Admin role
    SELECT @adminroleguid = id
    FROM   dbo.securityrole
    WHERE  Name = 'Administrator'

    -- Add the user as a systemuser (unless they already exist...)
    IF NOT EXISTS
        (SELECT ID
         FROM   SYSTEMUSER
         WHERE  NAME = @username
        )
    BEGIN
        INSERT
        INTO SYSTEMUSER VALUES
            (
                '00000000-0000-0000-0000-000000000000',
                @newuserguid,
                @username,
                0
            )
    END

    -- Get the user's guid
    SELECT @userguid = ID
    FROM   SYSTEMUSER
    WHERE  NAME = @username

    -- Add the user's guid and the admin role to the SystemUserRoles table
    INSERT
    INTO dbo.SystemUserRoles VALUES
        (
            NEWID(),
            @adminroleguid,
            @userguid,
            NULL,
            0
        )

We also may need to add the record to the appropriate AD groups and CRM tables. I’m not going to show you how to add items to Active Directory Groups or which groups to use. Suffice to say, you would need to know the user’s Active Directory sid (Security ID). Try this command from the server prompt:

dsquery user -name Greg* | dsget user -sid

This should return a result similar to this:

sid

S-1-5-21-0123456789-0123456789-0123456789-1234

dsget succeeded

You’re on your own from this point… 😉

Dates in FetchXml queries

Another quick post. A colleague was trying to execute a FetchXml statement that included a filter on a date field but couldn’t understand why he was not returning the expected results (indeed, wasn’t returning any results).

At first glance, the query looked fine:

    <fetch mapping="logical">
      <entity name="new_entity">
        <attribute name="new_entityid"></attribute>
        <filter type="and">
          <condition attribute="new_linkentityid" operator="eq" value="f25f4cee-e851-df11-bebe-000c29a1faa8"></condition>
          <condition attribute="new_date" operator="eq" value="2010-04-30"></condition>
        </filter>
      </entity>
    </fetch>

The link entity was definitely correct and the date field certainly read 30th April 2010. The date field was also defined as Date Only in the attribute definition.

It transpired that the problem was (predictably) linked to times. CRM stores all dates in UTC format, so even if the attribute is defined as “Date Only” in the entity definition, 30th April 2010 will actually be stored as 2010-04-30T00:00:00. For some reason, which I haven’t yet determined for certain (there are a couple of theories that I don’t have time to test), the record he was seeking was dated 2010-04-30T08:00:00. It may be due to his time zone, since he was working in the US at the time of the problem. It may be that this attribute was previously a Date AND Time attribute and then got changed. Either way, the simplest solution was to use a different FetchXml operator – replacing the “eq” operator in the “new_date” condition with the “on” operator, thereby ignoring times:

    <fetch mapping="logical">
      <entity name="new_entity">
        <attribute name="new_entityid"></attribute>
        <filter type="and">
          <condition attribute="new_linkentityid" operator="eq" value="f25f4cee-e851-df11-bebe-000c29a1faa8"></condition>
          <condition attribute="new_date" operator="on" value="2010-04-30"></condition>
        </filter>
      </entity>
    </fetch>

A list of FetchXml operators can be found on MSDN
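To see why the two operators behave differently for this record, here is a tiny Python model of the comparison. It is only an illustration of the behaviour described above, not how CRM implements its operators, and it ignores time zone conversion entirely. The function names are my own.

```python
from datetime import datetime


def matches_eq(stored, filter_value):
    """Model of 'eq': the full timestamp must match (a bare date means midnight)."""
    return stored == datetime.fromisoformat(filter_value)


def matches_on(stored, filter_value):
    """Model of 'on': only the calendar date is compared, the time is ignored."""
    return stored.date() == datetime.fromisoformat(filter_value).date()


# The record from the story above was stored with a time of 08:00:00.
stored_value = datetime(2010, 4, 30, 8, 0, 0)
eq_result = matches_eq(stored_value, "2010-04-30")  # False: 08:00 is not midnight
on_result = matches_on(stored_value, "2010-04-30")  # True: same calendar day
```

In this model the “eq” filter only finds the record if its stored time happens to be exactly midnight, which matches what my colleague saw.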